EMOCA

Emotion Driven Monocular Face Capture and Animation

Radek Daněček, Michael J. Black, and Timo Bolkart

Computer Vision and Pattern Recognition (CVPR) 2022, New Orleans, Louisiana

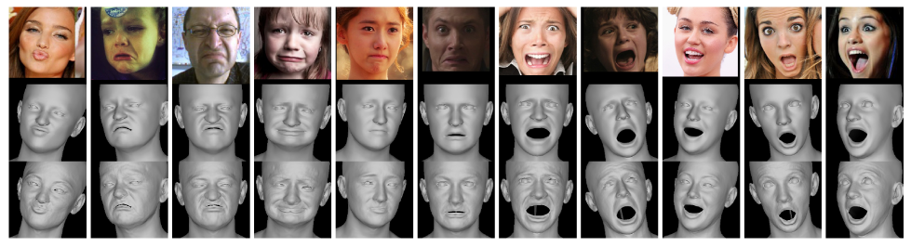

EMOCA regresses 3D faces from images with facial geometry that captures the original emotional content. Top row: images of people with challenging expressions. Middle row: coarse shape reconstruction. Bottom row: reconstruction with detailed displacements.

Abstract

As 3D facial avatars become more widely used for communication, it is critical that they faithfully convey emotion. Unfortunately, the best recent methods that regress parametric 3D face models from monocular images are unable to capture the full spectrum of facial expression, such as subtle or extreme emotions. We find the standard reconstruction metrics used for training (landmark reprojection error, photometric error, and face recognition loss) are insufficient to capture high-fidelity expressions. The result is facial geometries that do not match the emotional content of the input image. We address this with EMOCA (EMOtion Capture and Animation), by introducing a novel deep perceptual emotion consistency loss during training, which helps ensure that the reconstructed 3D expression matches the expression depicted in the input image. While EMOCA achieves 3D reconstruction errors that are on par with the current best methods, it significantly outperforms them in terms of the quality of the reconstructed expression and the perceived emotional content. We also directly regress levels of valence and arousal and classify basic expressions from the estimated 3D face parameters. On the task of in-the-wild emotion recognition, our purely geometric approach is on par with the best image-based methods, highlighting the value of 3D geometry in analyzing human behavior. The model and code are publicly available.
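The idea of an emotion consistency loss can be illustrated with a minimal sketch. It assumes that some pretrained emotion recognition network maps both the input image and the rendered 3D reconstruction to feature vectors, and that the loss is the distance between the two. The function name, the toy feature vectors, and the choice of Euclidean distance are all assumptions made for illustration; the abstract does not specify them.

```python
import math
from typing import Sequence


def emotion_consistency_loss(
    input_features: Sequence[float],
    rendered_features: Sequence[float],
) -> float:
    """Euclidean distance between two emotion feature vectors.

    The metric is an assumption for this sketch, not a detail from the abstract.
    """
    if len(input_features) != len(rendered_features):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(
        sum((a - b) ** 2 for a, b in zip(input_features, rendered_features))
    )


# Toy stand-ins for the outputs of a hypothetical emotion feature extractor.
features_of_input_image = [0.8, 0.1, 0.3]
features_of_good_render = [0.8, 0.1, 0.3]  # reconstruction conveys the same emotion
features_of_flat_render = [0.2, 0.1, 0.0]  # reconstruction loses the expression

loss_good = emotion_consistency_loss(features_of_input_image, features_of_good_render)
loss_flat = emotion_consistency_loss(features_of_input_image, features_of_flat_render)
```

In this toy setup, a reconstruction whose emotion features match those of the input image gets zero loss, while one that flattens the expression is penalized, which is the behavior a training signal of this kind is meant to encourage.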

Video

More Information

- pdf preprint

- supplemental pdf

- code

- EMOCA Project page at MPI:IS

- For questions, please contact emoca@tue.mpg.de

News

- 02/23: EMOCA v2 is out! The new model has better lip sync, landmark alignment, and eye closure.

- 04/22: Supplementary video public

- 04/22: Code, trained models, and preprint publicly available

- 03/22: EMOCA is accepted to CVPR 2022

Referencing EMOCA

@inproceedings{EMOCA:CVPR:2021,
  title = {{EMOCA}: {E}motion Driven Monocular Face Capture and Animation},
  author = {Danecek, Radek and Black, Michael J. and Bolkart, Timo},
  booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages = {20311--20322},
  year = {2022}
}